Breaking into the (Digital) BitBox

In this post, I am going to discuss the security issues I discovered in a hardware wallet known as BitBox, formerly known as “Digital Bitbox”. It is important to note that I have not fully audited the device, and these issues were found from a preliminary look at the device.

Note that, while I intended to quote BitBox’s own descriptions of the fixes, they denied me permission to do so. However, they assure me that they will be publishing their own report which I trust will help fill in the gaps.

What is a hardware wallet?

As cryptocurrencies, such as Bitcoin, increase in popularity, those who use them become targets. The lack of a central authority in cryptocurrencies (where public key cryptography is used to prove ownership of funds) means that – unlike conventional banking, whereby deposits are insured and fraudulent transactions can be reversed – users are at risk of theft with impunity.

Simply put, to make an (irreversible!) cryptocurrency transaction, all you need to do is create a digital signature with your private key.

As such, it is essential for users to protect their private keys. One way private keys can be stolen is by malware that has infected the computer used to interact with them, even if the keys are encrypted while not in use.

Hardware wallets attempt to rectify this issue by storing the private key on a discrete piece of hardware connected to the computer, which then performs cryptographic operations with the key. In the absence of a security vulnerability, this prevents malware from stealing the private key.

Furthermore, users desire protection against physical access to the hardware wallet, in case the device itself is lost or stolen. Passphrase or PIN protection alone is not enough – the hardware wallet must be tamper-resistant so that it is prohibitively expensive for most attackers to perform a physical attack to extract the keys from the microcontroller.

But why not just use a hardware security module (HSM) – a tamper-resistant physical device for managing cryptographic keys, that existed long before the invention of the hardware wallet?

Well, one issue faced by generic HSMs is that – even though an attacker cannot extract the actual private key – they can still ask it to sign arbitrary data. It’s no use protecting your private key from malware if it can still ask your wallet to sign a transaction sending all your life savings to a cybercriminal!

Thus, it is necessary for the hardware wallet to display to the user the details of the transaction and require user confirmation before signing it – in a way that cannot be spoofed by malware.

Correspondingly, hardware wallets differ from HSMs in that they must also include a secure physical display and confirmation button, as well as being able to parse the data to sign (so that they can display the transaction information without risk of it being spoofed).

The BitBox approach

Immediately, the BitBox fails to meet our definition of a hardware wallet. While the physical touch button for confirmation present on the device merely eludes photography, there is obviously no secure display to be found.

Before we dismiss this device as a hardware wallet, we must take a look under the surface. Despite the mundane appearance of a glorified MicroSD reader, beneath the matte black plastic lives an ATSAM4S4A microcontroller. This is paired with a tamper-resistant storage chip, known as the ATAES132A. We can thus conclude that this device might be able to satisfy the requirements for a portable HSM.

But to realize its potential as a hardware wallet, we must take a look at the alternative approach employed by BitBox.

Instead of using a secure display found on the device, the BitBox can display transaction information on a paired smartphone, where the user must additionally approve or reject it before the device will create a digital signature.

The assumption is that the risk of the same attacker having compromised both your computer and smartphone when you decide to make a transaction (since you must also confirm the transaction with the touch button on the BitBox itself) is acceptably low for most users.

While this threat model is subject to debate, for the remainder of this post, I am going to assume it is valid.

In summary, to break the security of the BitBox, we must be able to do any of the following:

- Steal private keys from the device via malware

- Sign a different transaction to the one shown on the paired smartphone

- Extract private keys from the device with physical access, without knowing the passphrase

In this post, I am going to demonstrate how to do all three.

Unauthenticated encryption as authentication

As I will cover in more detail later on, the BitBox makes heavy use of encryption, specifically the AES-256-CBC cipher.

While this is a good choice for its confidentiality guarantees, it provides neither integrity nor authenticity guarantees.

Repeat after me: encryption is not authentication!

This misunderstanding usually manifests as mistakenly relying on encrypted ciphertext to prove that someone knows the encryption key.

Unfortunately, this is exactly how BitBox validates that the user knows the passphrase. This means that, if an attacker can obtain any ciphertext encrypted with the passphrase (e.g. if they are snooping on the USB bus), they can forge another message by taking advantage of the way CBC works, and the lack of authentication.

To explain briefly: AES-256-CBC is an encryption method that uses the AES cipher, with a 256-bit key, and the CBC (cipher block chaining) mode of operation. In order to encrypt anything with AES, you must split the plaintext into 16 byte blocks that are encrypted individually and then concatenated to produce the ciphertext.

Unfortunately, this is dangerous because encrypting the same plaintext block with the same key results in the same ciphertext block. To prevent this, we use different modes of operation. The “cipher block chaining” mode works by XORing the previous ciphertext block into the plaintext before encrypting it. The nature of the XOR operation is such that doing the same after decrypting the ciphertext block will return the original plaintext.

This works well for all blocks except the first, of course, since there is no previous ciphertext block. As such, every time we want to encrypt something, we generate a random 16 byte block, known as the IV (initialization vector), and prepend it to the ciphertext. As long as we ensure this IV is unique, we should never end up encrypting the same plaintext to the same ciphertext.

However, this is vulnerable to a malleability attack where an attacker is able to change part of the plaintext without knowing the key. If we take a block of ciphertext where the plaintext is known, we can actually modify its plaintext by modifying the previous ciphertext block (since this is XORed into the plaintext after decrypting it). Unfortunately, this will corrupt the previous block and the attacker cannot tell what it will decrypt to. However, since the IV is never decrypted, this means the attacker can reliably modify the first block of the plaintext. The attacker can also carefully modify other blocks if they know that the previous block decrypting to 16 bytes of garbage will not matter.
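
To make the malleability concrete, here is a minimal sketch in C. Note the assumptions: `toy_encrypt_block` and `toy_decrypt_block` are a deliberately insecure, made-up stand-in for AES (so the example needs no crypto library), and every function name here is hypothetical rather than taken from the BitBox firmware. The attack itself relies only on how CBC chains blocks, not on the cipher underneath.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

#define BLOCK 16

/* TOY block cipher: byte-wise, invertible, and completely insecure.
 * It only stands in for AES so that the example is self-contained. */
static uint8_t rotl8(uint8_t v) { return (uint8_t)((v << 3) | (v >> 5)); }
static uint8_t rotr8(uint8_t v) { return (uint8_t)((v >> 3) | (v << 5)); }

void toy_encrypt_block(const uint8_t key[BLOCK], const uint8_t in[BLOCK], uint8_t out[BLOCK])
{
    for (size_t i = 0; i < BLOCK; i++)
        out[i] = (uint8_t)(rotl8((uint8_t)(in[i] ^ key[i])) + key[(i + 1) % BLOCK]);
}

void toy_decrypt_block(const uint8_t key[BLOCK], const uint8_t in[BLOCK], uint8_t out[BLOCK])
{
    for (size_t i = 0; i < BLOCK; i++)
        out[i] = (uint8_t)(rotr8((uint8_t)(in[i] - key[(i + 1) % BLOCK])) ^ key[i]);
}

/* CBC encryption. `out` receives the IV followed by the ciphertext,
 * so it must have room for len + BLOCK bytes. */
void cbc_encrypt(const uint8_t key[BLOCK], const uint8_t iv[BLOCK],
                 const uint8_t *pt, size_t len, uint8_t *out)
{
    assert(len % BLOCK == 0);
    memcpy(out, iv, BLOCK);
    for (size_t off = 0; off < len; off += BLOCK) {
        uint8_t x[BLOCK];
        /* XOR the previous ciphertext block (or the IV) into the plaintext. */
        for (size_t i = 0; i < BLOCK; i++)
            x[i] = pt[off + i] ^ out[off + i];
        toy_encrypt_block(key, x, out + BLOCK + off);
    }
}

/* CBC decryption of IV || ciphertext; `pt` receives len - BLOCK bytes. */
void cbc_decrypt(const uint8_t key[BLOCK], const uint8_t *in, size_t len, uint8_t *pt)
{
    assert(len >= BLOCK && len % BLOCK == 0);
    for (size_t off = BLOCK; off < len; off += BLOCK) {
        uint8_t x[BLOCK];
        toy_decrypt_block(key, in + off, x);
        for (size_t i = 0; i < BLOCK; i++)
            pt[off - BLOCK + i] = x[i] ^ in[off - BLOCK + i];
    }
}

/* The attack: WITHOUT the key, rewrite the first plaintext block from
 * `known` to `wanted` by XORing the difference into the IV (msg[0..15]). */
void cbc_rewrite_first_block(uint8_t *msg, const uint8_t known[BLOCK], const uint8_t wanted[BLOCK])
{
    for (size_t i = 0; i < BLOCK; i++)
        msg[i] ^= known[i] ^ wanted[i];
}
```

For instance, if an eavesdropper knows that the first block of a message decrypts to `pay alice 000001`, calling `cbc_rewrite_first_block` with the wanted text `pay mallory 9999` makes the receiver decrypt exactly that, while every later block is left intact.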

One example where this is an issue is for users of Qubes OS, where each application is isolated from others in a separate virtual machine. This includes untrusted “USB VMs” whose role is to communicate with USB devices on behalf of other virtual machines.

The hypothesis is that you are protected from a USB stack exploit, since a malicious USB device could only gain control of your USB VM. Since you enter your BitBox passphrase into a different VM where your wallet software is running, malware in your USB VM wouldn’t be able to do anything harmful as it doesn’t know the passphrase and thus cannot modify the messages being sent between the BitBox and the wallet software. All it could do is prevent messages from being transmitted, or try to trick you into factory resetting the device.

However, this hypothesis is actually false! Due to the lack of authentication, the USB VM could actually manipulate the ciphertext to change the messages being sent to the BitBox – even though it doesn’t know the passphrase!

This is not an issue if you use authenticated encryption (such as AES-GCM, or ChaCha20/Poly1305), or include a message authentication code (MAC).

Nevertheless, I never even bothered trying to exploit this vulnerability as there were far more trivial ways to break the security of the various components that misused encryption in this manner.

However, despite the security assumptions that users make being broken (for example, from a Twitter discussion about hardware wallets and Qubes), BitBox deemed this to not be a security issue and refused to reward it with a bounty.

It’s understandable that a method of exploiting is not immediately obvious, but I am very unsatisfied with BitBox’s conclusion on this matter.

Bizarrely, despite implementing a MAC following my disclosure, BitBox decided not to use the implementation for encrypting data stored on the device, or USB communications.

Regardless, I haven’t reviewed their implementation at all and cannot vouch for its security in any way.

Private keys in plain sight

The first vulnerability I am going to discuss, albeit not the first I discovered, is how malware can steal private keys from the device.

This attack requires the user to make use of the “hidden wallet” feature. As I mentioned earlier, cryptocurrency users are targets – not just for cyberattacks, but also for physical altercations. As such, the BitBox includes a feature whereby you can segregate your funds in two wallets with different passphrases. The rationale is that you can give the hidden passphrase to your assailant and they can take the substantially lesser contents of that wallet – while leaving your life savings intact.

Arguments about flawed technical solutions to a non-technical problem aside, it’s important to note that I found a number of issues whereby a knowledgeable attacker could determine whether this functionality is in use (thus defeating the purpose). So I wouldn’t exactly recommend you use it.

Regardless, I am going to discuss an even deeper flaw that enables malware to steal your private keys with no additional user interaction, provided the hidden wallet functionality is used on that computer.

Understanding BIP-32

If you’ve ever used Bitcoin before, you’ll know that a Bitcoin wallet will generate multiple addresses. This is done for a number of reasons – for example, to provide a very small amount of financial privacy because your transactions don’t all occur within the same address. Since each of these addresses has a separate private key, a user needs to keep making regular backups, because their old backups won’t include the newly generated addresses.

As you might imagine, it is very tedious to make backups after every transaction, and early Bitcoin wallets used the ineffective workaround of pre-generating a pool of private keys.

However, a Bitcoin developer designed a system to resolve this, known as BIP-32. The idea is that you can deterministically generate an unlimited number of private keys from a single master private key. This means you only ever have to back up the master private key – thus resolving the issue described above.

Additionally, the standard describes how you can deterministically generate the public keys if you have the master public key. This is useful for a hardware wallet because you can give the computer the master public key and it can generate all the public keys for the wallet – without ever knowing the private keys.

The mechanics of BIP-32 rely on an understanding of elliptic-curve cryptography, so we will not cover them in this post. But the high-level overview is that a BIP-32 private key consists of a Bitcoin private key, and a “chaincode”. The public key counterpart consists of the complementary Bitcoin public key, and the same chaincode as its private key counterpart.

Domain separation

So, we know that a BIP-32 key consists of a Bitcoin key and a mysterious chaincode. Disappointingly, the chaincode is just a random 32-byte blob which seemingly serves no purpose – BIP-32 could be implemented without it.

But why do we use it? The answer is simple: domain separation. While we could re-use an existing Bitcoin private key for BIP-32, this is a terrible idea. If the BIP-32 scheme is broken, we’ve compromised our existing funds. And, if the Bitcoin private key we re-used is compromised somehow, we’ve also compromised the BIP-32 child keys!

Thus, we use our chaincode to prevent this harmful key reuse (rather, misuse!).

But, it just so happens that a private key is also a random 32-byte blob. So, if you’re an irresponsible maverick with no regard for domain separation, you might ask yourself, “Why can’t I use a private key as a chain code, or vice versa?”

If you take away one thing from this article, it should be that you should never ever do this! Do not even think about it!

Unfortunately, this is exactly what BitBox did!

    uint8_t *wallet_get_master(void)
    {
        if (HIDDEN) {
            return memory_master_hww_chaincode(NULL);
        } else {
            return memory_master_hww(NULL);
        }
    }

    uint8_t *wallet_get_chaincode(void)
    {
        if (HIDDEN) {
            return memory_master_hww(NULL);
        } else {
            return memory_master_hww_chaincode(NULL);
        }
    }

The rationale for this eludes me, but BitBox chose to create the master private key for its hidden wallet by flipping around the private key and chain code from the normal wallet.

Disastrously, this means that requesting the master public key for the normal wallet will not only give us the normal wallet chain code, but the private key for the hidden wallet. Vice versa, requesting the master public key for the hidden wallet will also give us the private key for the normal wallet.

To obtain the master public key for a wallet, we need to know the passphrase – but do not require confirmation on the device. As such, if you have ever entered the normal passphrase and hidden passphrase on a computer, malware can steal your master private keys without user confirmation!
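
The consequence of the swap can be modelled in a few lines of C. This is only a sketch under stated assumptions: `device_secrets`, `xpub_view` and `export_xpub` are hypothetical names of mine, and the actual public key derivation is omitted, since the only thing that matters here is that an extended public key carries the chain code in the clear.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

#define KEY_LEN 32

/* Hypothetical model of the two 32-byte secrets held by the device. */
typedef struct {
    uint8_t master[KEY_LEN];    /* private key of the NORMAL wallet */
    uint8_t chaincode[KEY_LEN]; /* chain code of the NORMAL wallet  */
} device_secrets;

/* The part of an extended public key that matters here: the chain code.
 * (The public key itself is left out of this sketch.) */
typedef struct {
    uint8_t chaincode[KEY_LEN];
} xpub_view;

/* Same selection logic as the firmware excerpt: the hidden wallet
 * simply swaps the roles of the two secrets. */
const uint8_t *wallet_master(const device_secrets *s, int hidden)
{
    assert(s != NULL);
    return hidden ? s->chaincode : s->master;
}

const uint8_t *wallet_chaincode(const device_secrets *s, int hidden)
{
    assert(s != NULL);
    return hidden ? s->master : s->chaincode;
}

/* Exporting a master public key hands the chain code to the computer. */
xpub_view export_xpub(const device_secrets *s, int hidden)
{
    xpub_view x;
    memcpy(x.chaincode, wallet_chaincode(s, hidden), KEY_LEN);
    return x;
}
```

In this model, the chain code returned by `export_xpub(&s, 0)` is byte-for-byte the hidden wallet's master private key, and the one returned by `export_xpub(&s, 1)` is the normal wallet's master private key.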

BitBox’s fix

BitBox informed me that they fixed this by deriving the hidden wallet using the BIP-39 passphrase feature. Assuming this is implemented correctly, it is an ideal solution as it also strengthens the plausible deniability (since obtaining the master private key for the normal wallet will not give you the hidden wallet). Nevertheless, the fact that the hidden wallet is stored on-device means this is still not perfect plausible deniability and should be used with caution.

Additionally, as a band-aid, it is no longer possible to request the master public key, which should prevent the described method of extracting the private keys.

Cracking the two-factor authentication

The next attack we are going to look at is breaking the “two-factor authentication” – the requirement that transactions be confirmed on a paired smartphone (the “second factor”). Rather than parsing the transaction on the hardware wallet, BitBox takes the approach of blindly signing arbitrary data on the hardware wallet – relying solely on the smartphone app to parse and display the transaction details.

The approach taken by the BitBox is to pair the device with a smartphone by exchanging an encryption key. Subsequently, the BitBox and smartphone can encrypt messages to each other, and send them to one another through a potentially malicious computer – without allowing that computer to snoop on the messages!

Sounds great! Except for the fact that, as I mentioned earlier, encryption is not authentication so the computer can still do Bad Things™ to the encrypted messages – but let’s ignore this fatal issue, because there’s another problem.

How do you exchange the encryption key? Since the BitBox has no display, and only a single button, the key cannot be exchanged without passing through the potentially malicious computer.

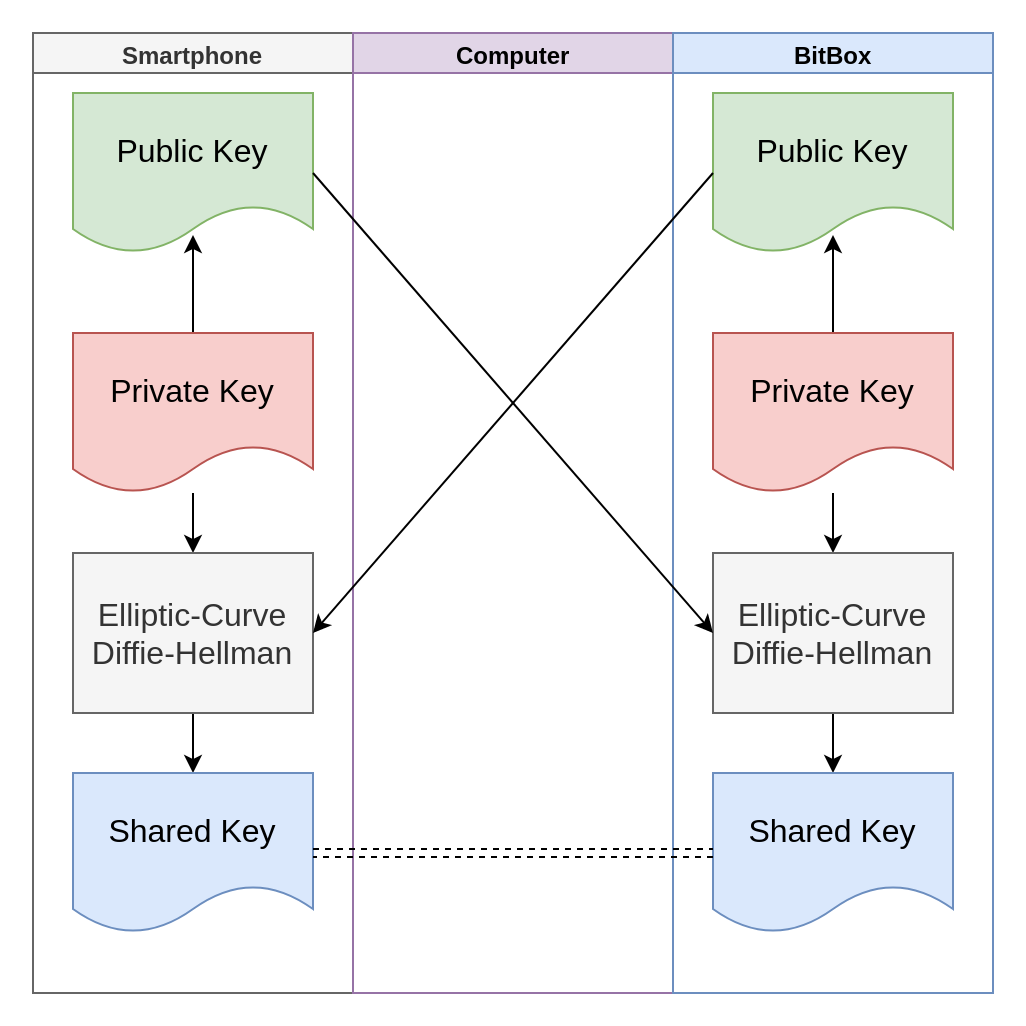

Luckily, there is a type of cryptography that can help here. The BitBox uses a type of “key exchange”, known as an Elliptic-Curve Diffie-Hellman (ECDH). Simply put, if both parties generate their own private key, they can combine it with the other party’s public key to obtain a shared key. Magic!

ECDH is often used in other contexts, such as when you connect to many websites over HTTPS.

As you can see above, only the public keys pass through the computer. Since these cannot be reversed into private keys, there is no way for a passive man-in-the-middle on the computer to obtain the shared encryption key.

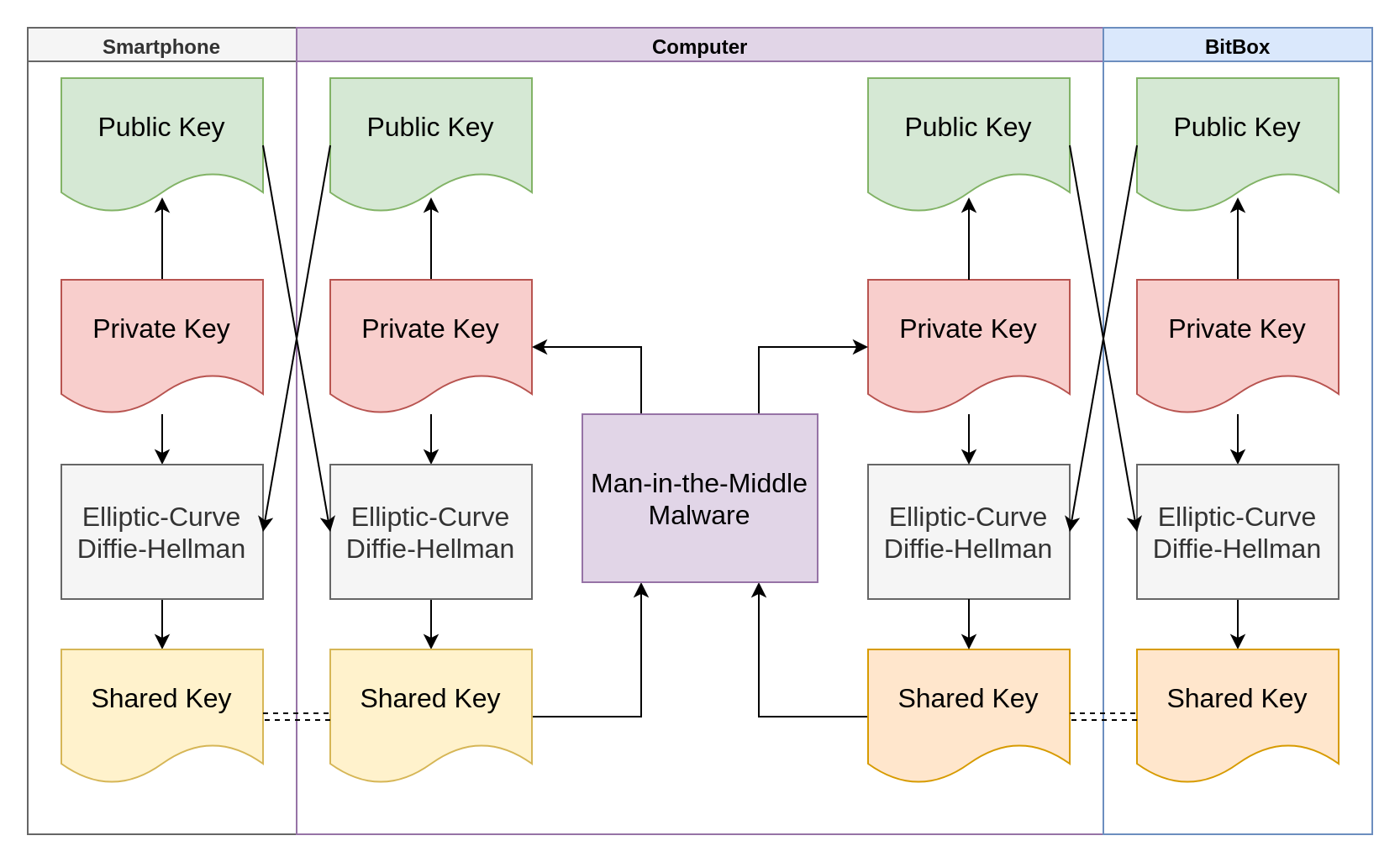

However, what if the computer actively attempts to man-in-the-middle the key exchange?

As you can see above, the malware can perform its own key exchange with the smartphone. It can then talk to the smartphone, pretending to be the BitBox, and tell it to display the expected transaction.

Then the malware, having performed its own key exchange with the BitBox, can pretend to be the smartphone, and tell the BitBox that the user confirmed the malicious transaction that turns their life savings into a hacker’s retirement fund.
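
The active attack can be sketched with a toy Diffie-Hellman exchange. To be clear about the assumptions: this uses classic finite-field Diffie-Hellman with a tiny 31-bit prime as a stand-in for ECDH (the man-in-the-middle logic is the same, but these parameters offer no security at all), and all names are hypothetical.

```c
#include <assert.h>
#include <stdint.h>

#define DH_P 2147483647ULL /* 2^31 - 1: a prime, but far too small for real use */
#define DH_G 7ULL

/* Square-and-multiply modular exponentiation. */
uint64_t mod_pow(uint64_t base, uint64_t exp, uint64_t mod)
{
    assert(mod > 1 && mod <= (1ULL << 32)); /* keeps the products below 2^64 */
    uint64_t result = 1;
    base %= mod;
    while (exp > 0) {
        if (exp & 1)
            result = (result * base) % mod;
        base = (base * base) % mod;
        exp >>= 1;
    }
    return result;
}

uint64_t dh_public(uint64_t priv) { return mod_pow(DH_G, priv, DH_P); }

uint64_t dh_shared(uint64_t my_priv, uint64_t their_pub)
{
    return mod_pow(their_pub, my_priv, DH_P);
}

typedef struct {
    uint64_t pub_for_bitbox;  /* sent to the BitBox in place of the phone's key  */
    uint64_t pub_for_phone;   /* sent to the phone in place of the BitBox's key  */
    uint64_t key_with_bitbox; /* key the BitBox believes it shares with the phone */
    uint64_t key_with_phone;  /* key the phone believes it shares with the BitBox */
} mitm_state;

/* The malware swaps both public keys for its own and ends up
 * sharing one key with each victim. */
mitm_state mitm_exchange(uint64_t mallory_priv, uint64_t bitbox_pub, uint64_t phone_pub)
{
    mitm_state m;
    m.pub_for_bitbox = dh_public(mallory_priv);
    m.pub_for_phone = m.pub_for_bitbox;
    m.key_with_bitbox = dh_shared(mallory_priv, bitbox_pub);
    m.key_with_phone = dh_shared(mallory_priv, phone_pub);
    return m;
}
```

Each side computes its key from the substituted public key, so the BitBox derives `key_with_bitbox` and the phone derives `key_with_phone`. The malware holds both and can decrypt, alter and re-encrypt anything passing between them.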

If you’re expecting me to whip out some more cryptography and magic away this issue, you’re out of luck. You see, the only way to solve this issue is to verify the public keys out-of-band (i.e. not through the attacker-controlled connection). If you try to create trust where there is none, you’re going to have a tough time.

For example, when you connect to websites over HTTPS, the server’s public key is verified using a “chain of trust” built into your web browser.

But BitBox took a different approach. When you pair the smartphone, the BitBox blinks out an LED sequence that you enter into your phone. This is used as a salt that is mixed (using the XOR operation) into the final shared key.

In theory, this prevents the active man-in-the-middle attack from obtaining the actual shared key – as it is still missing the out-of-band salt. You may question why BitBox didn’t just choose to transmit the public key through the LED?

Now, I can’t offer a definite explanation for that, because I don’t know. But what I can say is that it is really stressful to perform the LED pairing process.

Essentially, the BitBox slowly flashes the LED, 1 to 4 times. This is known as an “LED set”. You must then enter the number of flashes, from 1 to 4, on the smartphone. This process is then repeated for the required number of LED sets. Even with maximum concentration, you’re susceptible to miscounting and you won’t find out you’ve done this until the very end – at which point, you’ll need to repeat the process. And the resulting pressure caused by this risk only goes to increase the probability you’ll mess up!

But the most painful part of the process is that, for each set, you’re increasing the salt size by a mere 2 bits. That’s all.

Given that the process is so agonizing, you can see why you wouldn’t want to have to perform 132 sets(!) to transmit the public key (a compressed public key is 33 bytes, or 264 bits, at 2 bits per set). In fact, BitBox limited the number of LED sets to 6.

But that gives us a maximum salt size of 12 bits. In other words, an attacker need only try a maximum of 4096 possible salts to obtain the correct one.

The pairing process involves sending (through the malicious computer) an encrypted message with the known plaintext “Digital Bitbox 2FA”. This makes it trivial for the malware to perform an offline brute-force attack on the salt. My proof-of-concept attack takes under a second to complete on a relatively inexpensive, consumer-grade laptop.

With the salt trivially obtained, the malware can perform the man-in-the-middle attack described above, completely unimpeded by the obstacles BitBox attempted to put in its way.
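
The brute-force step can be sketched as follows. This is not my actual proof of concept, and it rests on several assumptions: `toy_encrypt` is a made-up, insecure stand-in for AES-256-CBC, the way `mix_salt` folds the 12 salt bits into the key is invented for illustration, and all names are hypothetical. What it does show faithfully is the shape of the attack: the malware knows the ECDH secret from its own exchange with the BitBox, knows the plaintext, and only has to try 4096 salts.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

#define KEY_LEN   32
#define SALT_BITS 12                /* 6 LED sets x 2 bits each */
#define SALT_MAX  (1u << SALT_BITS) /* 4096 candidate salts     */

static const char KNOWN_PLAINTEXT[] = "Digital Bitbox 2FA";
#define KNOWN_LEN (sizeof(KNOWN_PLAINTEXT) - 1)

/* ASSUMPTION: the salt is XORed into the first two bytes of the ECDH secret. */
void mix_salt(const uint8_t ecdh[KEY_LEN], uint16_t salt, uint8_t key[KEY_LEN])
{
    memcpy(key, ecdh, KEY_LEN);
    key[0] ^= (uint8_t)(salt & 0xFF);
    key[1] ^= (uint8_t)(salt >> 8);
}

/* TOY cipher standing in for AES-256-CBC; insecure by design. */
void toy_encrypt(const uint8_t key[KEY_LEN], const uint8_t *in, size_t len, uint8_t *out)
{
    for (size_t i = 0; i < len; i++) {
        uint8_t x = (uint8_t)(in[i] ^ key[i % KEY_LEN]);
        x = (uint8_t)((x << 3) | (x >> 5));
        out[i] = (uint8_t)(x + key[(i + 1) % KEY_LEN]);
    }
}

/* Try every salt until the known plaintext encrypts to the observed
 * ciphertext. Returns the salt, or -1 if no candidate matches. */
int brute_force_salt(const uint8_t ecdh[KEY_LEN], const uint8_t *ciphertext, size_t len)
{
    assert(len == KNOWN_LEN);
    uint8_t key[KEY_LEN];
    uint8_t guess[KNOWN_LEN];
    for (uint32_t salt = 0; salt < SALT_MAX; salt++) {
        mix_salt(ecdh, (uint16_t)salt, key);
        toy_encrypt(key, (const uint8_t *)KNOWN_PLAINTEXT, len, guess);
        if (memcmp(guess, ciphertext, len) == 0)
            return (int)salt;
    }
    return -1;
}
```

Even with a real cipher in place of the toy one, the loop body is a single short encryption, which is why 4096 iterations are no obstacle.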

BitBox’s fix

I have not yet reviewed their fix for myself, for reasons I discuss at the end of the article. Nevertheless, BitBox assure me they have designed a new pairing protocol that purports to resolve this issue.

Regardless, without studying the implementation, I cannot comment as to whether this is secure. I would advise both BitBox and any future researchers who wish to explore this to especially study whether the code path correctly enforces the expected behaviour, and whether the API flow can be manipulated by a malicious computer to break the assumptions of the user or software.

Stealing private keys without the passphrase

According to a quote in the BitBox FAQ, now removed at my request for reasons that will become obvious, the following protection is employed to protect your private keys:

All secrets (keys and passwords) are stored isolated on a separate high-security chip designed specifically to keep your secrets secret

Unfortunately, the reality doesn’t quite match the claims here. It’s true that there is a “high-security chip” in use (the ATAES132A I mentioned earlier) that does seem to meet the claims.

On the other hand, let me summarize the implementation I actually found in the firmware source code:

- The non-secure microcontroller stores an AES encryption key in its flash memory

- The “high-security chip” is configured to disable all security protections on its storage

- The non-secure microcontroller encrypts the private keys and passphrase with the AES encryption key, and writes them (encoded as Base64 for a reason that eludes me) to the now unprotected storage of the “high-security chip”

This is absolutely mind-boggling to me. The point of a secure chip is that you can store data on it that then requires authentication, in order to read the data back. Furthermore, you can verify the integrity of the data. With this in mind, why would you implement a secure chip in your hardware design, and then disable all the security protections?

As such, in the BitBox, the ATAES132A is a freely accessible EEPROM (i.e. less secure than the flash provided by the “non-secure” microcontroller) and the single point-of-failure is the AES encryption key living in said non-secure microcontroller.

We could definitely stop here, having disproved the claims made by BitBox. But if we dig deeper, we can discover how to use this knowledge to extract keys from the device.

Poking around the secure chip

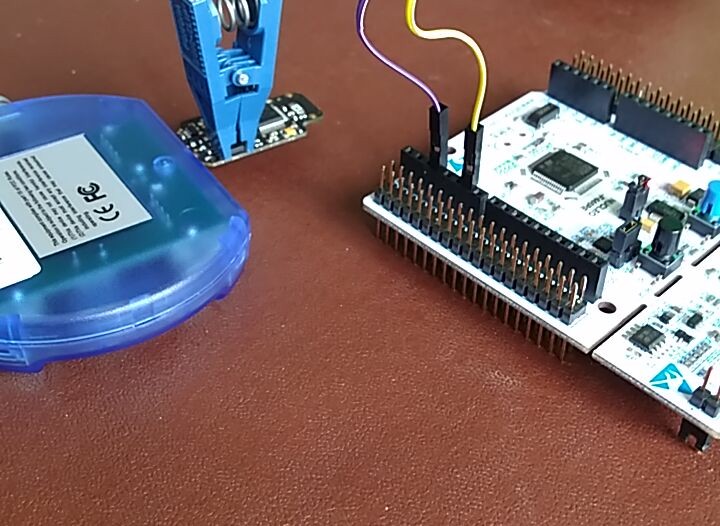

First, I needed a strategy for accessing the secure chip’s storage.

For context, I was sent a developer version of the BitBox which I am told differs from production versions in that it lacks epoxy and half of the plastic case. Unfortunately, the side of the BitBox covered by the remaining half of the plastic case happens to be where the secure chip resides, so I needed to remove the plastic case.

But life is never that simple and, since the plastic case actually forms part of the USB connector, the only solution was to utilize a hacksaw to remove the intrusive part of the case.

With that done, I slotted the BitBox and its plastic USB connector into a USB hub (USB 1.0, no less!) which held it in position so that I could maintain easy access to the secure chip.

Not wishing to risk supplying electricity through the IC test clip pictured (a POMONA SOIC Clip) – lest magic smoke appear – I connected the USB hub to the computer to power the device. The test clip was connected to an ST NUCLEO-F446RE dev board – host to my favorite embedded microcontroller family – for which it is trivial to write a driver to interface with the ATAES132A protocol.

Even before taking into consideration that the F446RE is totally overkill for this task, this equipment costs less than £20 (or $25) – making the attack incredibly inexpensive.

Long-lived encryption keys are not your friend

Now we can read from and write to the storage, it’s time to find an attack vector!

As I’ve mentioned before, the device is secured by a user-selected passphrase. If you have this passphrase, you can sign transactions or back up the private keys to the MicroSD. Without the passphrase, all you can do is factory reset the device – which wipes the existing private keys.

However, the factory reset does not wipe the AES encryption key. In fact, the same AES encryption key, originally programmed into the device in the factory, is used for the entire lifetime of the device. I’m not sure why BitBox adopted this approach but, generally, long-lived encryption keys should be avoided if possible.

And that’s where the issue comes in. If I factory reset your device – destroying your encrypted passphrase and private keys – the BitBox will encrypt my passphrase and private keys with the same encryption key.

Since the protections are disabled on the secure chip, we can freely read and write to its storage externally to the microcontroller. As such, we have a method to change the passphrase on any BitBox, without ever knowing the existing passphrase or the AES encryption key.

We can read the victim’s encrypted private keys from the storage using the hardware setup I described above. Then, after we factory reset the BitBox, we can set it up with our own passphrase. Finally, we can write the victim’s encrypted private keys back and we’ll have successfully changed the passphrase to one we know!

Then, we can unlock the device as per normal, except with our chosen passphrase, and steal all the funds from it!
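
The whole attack fits in a small simulation. As before, this is a sketch under assumptions: `toy_crypt` is a plain XOR standing in for the real encryption, passphrases and keys are fixed 32-byte buffers, and the `device`/`eeprom` model and all function names are hypothetical. The property it captures is that the long-lived key survives the reset while the external storage is freely readable and writable.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

#define SECRET_LEN 32

/* Unprotected external storage (the "high-security chip"). */
typedef struct {
    uint8_t enc_passphrase[SECRET_LEN];
    uint8_t enc_seed[SECRET_LEN];
} eeprom;

/* The microcontroller: holds the long-lived key the attacker never sees. */
typedef struct {
    uint8_t device_key[SECRET_LEN];
    eeprom *chip;
} device;

/* TOY cipher (XOR with the key); it is its own inverse. */
static void toy_crypt(const uint8_t key[SECRET_LEN], const uint8_t *in, uint8_t *out)
{
    for (int i = 0; i < SECRET_LEN; i++)
        out[i] = in[i] ^ key[i];
}

void device_setup(device *d, const uint8_t pass[SECRET_LEN], const uint8_t seed[SECRET_LEN])
{
    assert(d != NULL && d->chip != NULL);
    toy_crypt(d->device_key, pass, d->chip->enc_passphrase);
    toy_crypt(d->device_key, seed, d->chip->enc_seed);
}

/* Wipes the stored secrets but leaves device_key untouched. */
void device_factory_reset(device *d)
{
    assert(d != NULL && d->chip != NULL);
    memset(d->chip, 0xFF, sizeof *d->chip);
}

/* Returns 1 and outputs the seed if the passphrase matches, else 0. */
int device_unlock(const device *d, const uint8_t pass[SECRET_LEN], uint8_t seed_out[SECRET_LEN])
{
    uint8_t stored[SECRET_LEN];
    toy_crypt(d->device_key, d->chip->enc_passphrase, stored);
    if (memcmp(stored, pass, SECRET_LEN) != 0)
        return 0;
    toy_crypt(d->device_key, d->chip->enc_seed, seed_out);
    return 1;
}

/* The attack: only the external chip is touched directly. */
int swap_passphrase_attack(device *d, const uint8_t attacker_pass[SECRET_LEN],
                           uint8_t seed_out[SECRET_LEN])
{
    uint8_t saved[SECRET_LEN];
    uint8_t dummy_seed[SECRET_LEN] = {0};
    memcpy(saved, d->chip->enc_seed, SECRET_LEN); /* 1. dump via the test clip     */
    device_factory_reset(d);                      /* 2. reset without a passphrase */
    device_setup(d, attacker_pass, dummy_seed);   /* 3. set our own passphrase     */
    memcpy(d->chip->enc_seed, saved, SECRET_LEN); /* 4. write the victim's data back */
    return device_unlock(d, attacker_pass, seed_out);
}
```

Note that `swap_passphrase_attack` never reads `device_key`: the device itself performs the decryption once it has been convinced that the attacker's passphrase is the right one.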

Know Thy System

The obvious band-aid for this vulnerability is to generate a new encryption key when the device is factory reset.

As such, in response to my responsible disclosure, BitBox chose to “scramble” the encryption key by mixing random bytes in.

    uint32_t i = 0;
    uint8_t usersig[FLASH_USERSIG_SIZE];
    uint8_t number[FLASH_USERSIG_RN_LEN] = {0};
    random_bytes(number, FLASH_USERSIG_RN_LEN, 0);
    flash_read_user_signature((uint32_t *)usersig, FLASH_USERSIG_SIZE / sizeof(uint32_t));
    for (i = 0; i < FLASH_USERSIG_RN_LEN; i++) {
        usersig[i] ^= number[i];
    }
    flash_erase_user_signature();
    flash_write_user_signature((uint32_t *)usersig, FLASH_USERSIG_SIZE / sizeof(uint32_t));

Essentially, the code above does the following:

- The encryption key is read from flash

- The device generates random data of the same length as the encryption key

- Each byte of the encryption key is XORed with the corresponding random byte

- The new encryption key is written back to flash

This looks infallible; the XOR operation being used to mix the bytes preserves randomness, so mixing random bytes in will result in a random encryption key.

Unfortunately, if we take a look at the implementation for random_bytes, we quickly see a problem. BitBox managed to abstract away the fact that random_bytes is actually calling the random number generator on the secure chip; not generating random data on the microcontroller!

Presumably BitBox didn’t realize this when they were implementing the fix, and the fix must not have been reviewed thoroughly before they released it.

Since we can man-in-the-middle the secure chip, we are able to control the output of random_bytes. As such, we can send back all zeroes in place of random data and the XOR operation will leave the encryption key untouched.

Since this situation is exactly the same as prior to the fix, BitBox’s patch is useless and we can re-use the existing attack to change the passphrase.
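
A few lines of C show why the scramble does nothing once the random source is attacker-controlled. This is a sketch, not the firmware: `scramble_key`, `rng_fn` and both RNG functions are hypothetical names, and `counting_rng` is merely a deterministic placeholder for genuine random output.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

#define MAX_KEY_LEN 32

/* Where the "random" bytes come from. In the BitBox this is the secure
 * chip, which an attacker with physical access can impersonate. */
typedef void (*rng_fn)(uint8_t *buf, size_t len);

/* Same idea as the fix: XOR fresh random bytes into the stored key. */
void scramble_key(uint8_t *key, size_t len, rng_fn rng)
{
    assert(len <= MAX_KEY_LEN);
    uint8_t number[MAX_KEY_LEN] = {0};
    rng(number, len);
    for (size_t i = 0; i < len; i++)
        key[i] ^= number[i];
}

/* Placeholder for an honest RNG (deterministic here, so NOT random). */
void counting_rng(uint8_t *buf, size_t len)
{
    for (size_t i = 0; i < len; i++)
        buf[i] = (uint8_t)(i + 1);
}

/* What the man-in-the-middle returns instead: all zeroes. */
void mitm_rng(uint8_t *buf, size_t len)
{
    memset(buf, 0, len);
}
```

Calling `scramble_key(key, len, mitm_rng)` leaves `key` byte-for-byte identical, because XOR with zero is the identity.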

BitBox’s second fix

Once again, I was unable to review their fix due to reasons I will discuss at the end of the article.

Nevertheless, their description did not fill me with confidence because it was a minor modification to the way the key is scrambled – rather than fixing the root cause. If a future researcher wants to explore whether the new fix is secure, I would advise them to explore not only whether the key generation code is vulnerable, but also whether the code path can be bypassed entirely.

For example, I found numerous cases where the return values of security-essential functions were not checked, allowing an attacker to break various assumptions made by the BitBox developers. In some cases, this let you access code paths meant for erased wallets – allowing you to change the passphrase as you would on a brand-new wallet.

But the bottom line is that, once again, this is a band-aid for a more fundamental issue: the high-security chip is not providing any security. Unfortunately, for reasons I shall discuss in the next section, the root issue cannot be fixed.

Moving forward

Despite the bounty BitBox paid me for the issues I disclosed being rather modest for the number of issues reported (citing financial issues as the rationale), I attempted to provide them with detailed information on possible fixes for all the issues. However, the bounty was definitely not high enough to warrant me urgently reviewing the fixes they have implemented, so I cannot yet vouch as to whether the above vulnerabilities have been resolved. I hope to take a look in more depth as time frees up.

Furthermore, since I found these issues from reviewing a minuscule proportion of the codebase, I imagine more issues of similar severity will be discovered in the future.

Additionally, less severe issues were discovered, such as the fact that an attacker can man-in-the-middle the high-security chip to drop write commands to the brute-force counter. This would enable an attacker to have an unlimited number of attempts at brute-forcing the passphrase, without the device resetting as advertised. Personally, my research is focused on “smoking guns” rather than minor issues like this, but BitBox should explore fixing this or warn users that the brute-force protection is only superficial.

EDIT: BitBox informs me that an update released yesterday should tackle this issue, and make the firmware more robust in adversarial conditions.

Also of particular note are the bizarre design decisions, such as the superfluous use of Base64 and hex-encoding throughout the embedded codebase, both for data stored in memory and for data sent via 8-bit clean transports – both contexts in which raw binary data would be far more appropriate. While these do not immediately raise security issues, complexity like this can introduce vulnerabilities or prove invaluable to attackers trying to exploit another vulnerability.

Additionally, since the high-security chip included in the BitBox cannot be reconfigured, it is largely redundant for existing hardware (its only remaining purpose is as a hardware RNG). As such, at my request, BitBox removed mentions of the chip from their website. This is very unfortunate as it was arguably the only distinguishing factor and advantage over its competitors.

BitBox did, however, argue that storing secrets on a separate chip helps prevent a “memory attack” on the MCU from accessing the private keys.

I would definitely dispute this claim as any remote memory access attack on an environment like this (lacking ASLR, or even an MPU configuration that prevents code execution from SRAM) can be trivially upgraded to a remote code execution vulnerability. Furthermore, any physical attack on the device can simply read the encrypted secrets from the secure chip.

Note that, while the lack of ASLR (address space layout randomization) – a technique that makes it more difficult for attackers to write exploits for vulnerabilities they find – is a limitation of the ARM microcontrollers used in embedded devices like the BitBox, the lack of MPU configuration is disappointing and I hope that BitBox rectify this soon.

Finally, the most important conclusion is not the quantity of security flaws found, but the nature of them. Unlike the exploits found in other hardware wallets, these were not elaborate techniques (e.g. side-channel attacks, buffer overflows or fault injections); they were elementary flaws in the high-level design of the device. I urge BitBox to seek a professional audit of their entire device (both the firmware, and the hardware design), which may help prevent issues like this arising again.

On the flip side, there may be more hope for the second version of this device, as BitBox told me that they have assigned more resources towards bringing a better-secured second version of the device to market, expecting sales to outpace those of the first version. Given that the second version will include a display, it should also remove a large amount of complexity from the design that contributed towards these vulnerabilities.

Acknowledgements

Many thanks to Josh Harvey for reviewing this post and providing countless invaluable suggestions!